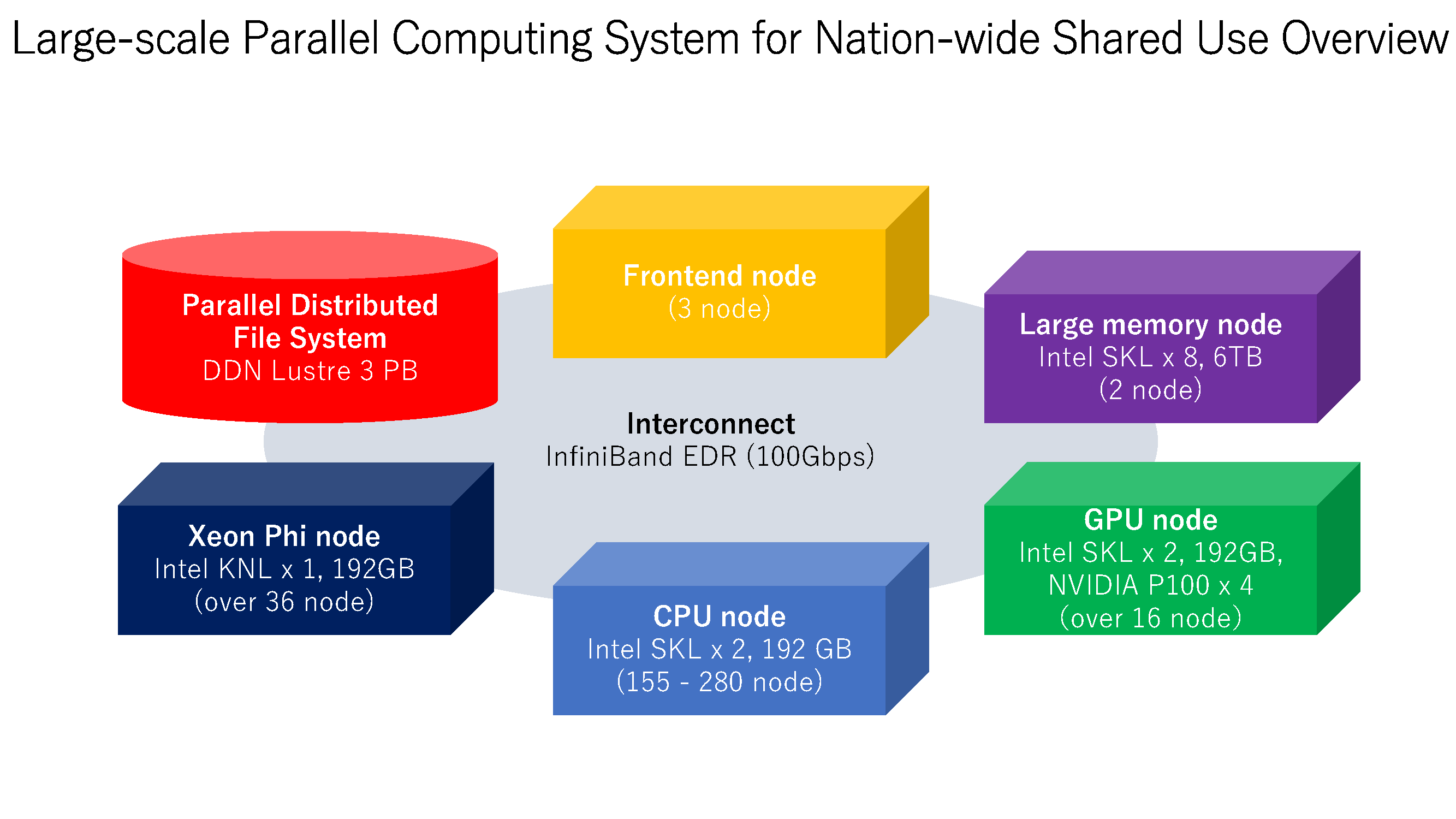

[newsflash] Configuration of the "Large-scale Parallel Computing System for Nation-wide Shared Use"

The configuration of the "Large-scale Parallel Computing System for Nation-wide Shared Use" is as follows:

| Large-scale Parallel Computing System for Nation-wide Shared Use (codename: octopus) | | |
|---|---|---|
| Theoretical Computing Speed | approximately 1.446 PFLOPS | |
| Compute Node | CPU node | Intel CPU (Skylake) x2, 192 GB memory |
| | GPU node | Intel CPU (Skylake) x2, NVIDIA GPU (P100) x4, 192 GB memory |
| | XeonPhi node | Intel Xeon Phi (Knights Landing), 192 GB memory |
| | Large memory node | Intel CPU (Skylake) x8, 6 TB memory |
| Interconnect | InfiniBand EDR | |
| Storage | DDN Lustre (approximately 3 PB) | |

* This newsflash is dated June 1, 2017, so the final performance values may differ slightly from those listed here. Thank you for your understanding.

We will announce any progress on the contract or development on the following page.

Octopus

Posted: June 01, 2017